3D Modeling Workflows: DCC, AI, Scanning, and Hybrid

What are the main 3D modeling workflows?

The main 3D modeling workflows are Digital Content Creation (DCC), Artificial Intelligence (AI), 3D Scanning, and Hybrid approaches. Each follows distinct steps for turning a concept into a finished 3D model. A 3D modeling workflow is the systematic method an artist uses to create digital assets, covering every stage from initial concept sketches through modeling and texturing to production-ready deliverables. Artists and production managers select a workflow based on what the project requires:

- Available budgetary resources

- Production timeline constraints

- Required output quality specifications for final deliverables

The 3D mapping and modeling market is projected to grow at a compound annual growth rate (CAGR) of 16.8% from 2024 to 2030, indicating accelerating adoption of 3D technologies across industries including:

- Video game development

- Film production

- Architectural visualization

- Engineering design

- Product development

- Healthcare diagnostic applications
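To put the quoted growth rate in perspective, the short sketch below compounds it over the forecast window. It assumes 2024 is the base year, so 2024 to 2030 spans six compounding periods; the function name is illustrative and no market-size figure is implied.

```python
def compound_growth_factor(cagr: float, periods: int) -> float:
    """Return the total growth multiple after `periods` years at annual rate `cagr`."""
    return (1.0 + cagr) ** periods


# Assumption: 2024 is the base year, giving six compounding periods up to 2030.
factor = compound_growth_factor(0.168, 6)
print(f"Growth multiple over the forecast period: {factor:.2f}x")
```

Under that assumption, a 16.8% CAGR means the market would be roughly two and a half times its 2024 size by 2030.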

The 3D modeling industry is shifting from predominantly manual Digital Content Creation (DCC) methods toward increasingly automated and hybrid workflows that integrate artificial intelligence, machine learning, and advanced procedural generation.

Digital Content Creation (DCC) Workflows

Digital Content Creation (DCC) workflows are the traditional foundation of 3D asset production: the industry-standard approach that has shaped production practice since commercial 3D software became widespread in the 1990s. 3D artists use specialized DCC applications that give them direct control over core production processes including:

- Manual geometric modeling

- UV mapping

- Texture painting

- Skeletal rigging systems

- Keyframe animation workflows

Artists should select DCC tools when a project requires precise control over every geometric element, including vertex positions, edge flow, and surface normal orientation, to achieve a specific artistic vision or meet exact technical specifications.

| Software | Company | Specialization |
|---|---|---|
| Maya | Autodesk | Character animation and film production |
| Blender | Blender Foundation | Open-source comprehensive 3D suite |
| 3ds Max | Autodesk | Architectural visualization |
| Cinema 4D | Maxon | Motion graphics |
| Houdini | SideFX | Procedural generation and VFX simulation |

The DCC methodology provides artists with comprehensive creative control over fundamental production processes including:

- Topology construction techniques

- UV coordinate layout design

- Material property and shader assignments

- Systematic scene hierarchy organization

DCC workflows prove optimal for:

- Organic character modeling requiring animation-friendly topology

- Precision-engineered hard-surface mechanical designs demanding technical accuracy

- Stylized artistic content necessitating meticulous attention to both aesthetic form and technical function

DCC Production Workflow Process

The DCC production workflow follows these sequential stages:

- Concept Phase - Artists gather concept sketches and photographic reference imagery as visual guidance

- Blockout Phase - Artists create primitive geometry that establishes fundamental proportions and spatial relationships

- Detailed Modeling Phase - Artists iteratively refine surface topology and add geometric complexity

- UV Unwrapping - Artists create optimized two-dimensional texture coordinate layouts

- Texturing Phase - Artists apply diffuse, normal, roughness, and metallic maps and build material shader networks

- Final Integration - Artists render final images offline or integrate the asset into a real-time engine

Reaching professional proficiency in a DCC production pipeline typically requires 500-1000 hours of practice, spent mastering:

- Complex application-specific interfaces

- Foundational 3D coordinate mathematics including vector operations and matrix transformations

- Technical competency in essential techniques including:

  - Non-destructive modifier stacks

  - Computational Boolean operations

  - Catmull-Clark subdivision surface modeling

  - Node-based procedural generation workflows
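As a rough illustration of the coordinate mathematics mentioned in the list above, the following sketch uses only the Python standard library to compute a face normal with a cross product and to rotate a point with a 3x3 matrix. The helper names (`face_normal`, `rotation_z`, `transform`) are illustrative and do not come from any particular DCC package, which would normally supply its own vector and matrix types.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = List[List[float]]


def sub(a: Vec3, b: Vec3) -> Vec3:
    """Component-wise difference a - b."""
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a: Vec3, b: Vec3) -> Vec3:
    """Cross product; the result is perpendicular to both inputs."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def normalize(v: Vec3) -> Vec3:
    """Scale a vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    if length == 0.0:
        raise ValueError("cannot normalize a zero-length vector")
    return (v[0] / length, v[1] / length, v[2] / length)


def face_normal(p0: Vec3, p1: Vec3, p2: Vec3) -> Vec3:
    """Unit normal of a triangle with counter-clockwise winding."""
    return normalize(cross(sub(p1, p0), sub(p2, p0)))


def rotation_z(angle_rad: float) -> Mat3:
    """3x3 matrix rotating points about the Z axis by angle_rad."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def transform(m: Mat3, v: Vec3) -> Vec3:
    """Multiply a 3x3 matrix by a column vector."""
    return (
        sum(m[0][k] * v[k] for k in range(3)),
        sum(m[1][k] * v[k] for k in range(3)),
        sum(m[2][k] * v[k] for k in range(3)),
    )


normal = face_normal((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
rotated = transform(rotation_z(math.pi / 2), (1.0, 0.0, 0.0))
```

Here the triangle lies in the XY plane, so its normal points along +Z, and rotating the X axis by 90 degrees about Z lands on the Y axis up to floating-point error.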

DCC methods deliver the strongest results in two cases: when a project calls for stylized forms that deliberately diverge from photorealism, and when it demands animation-optimized topology, with precisely placed edge loops for character deformation and polygon-efficient meshes that keep real-time rendering performant in game engines.

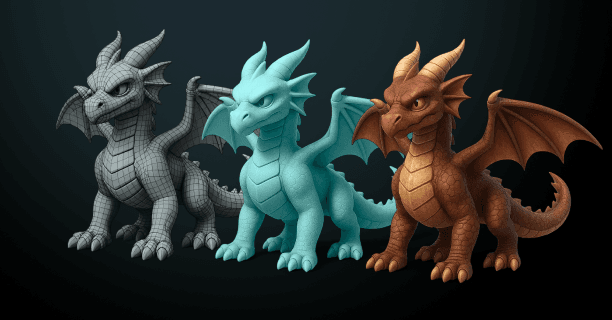

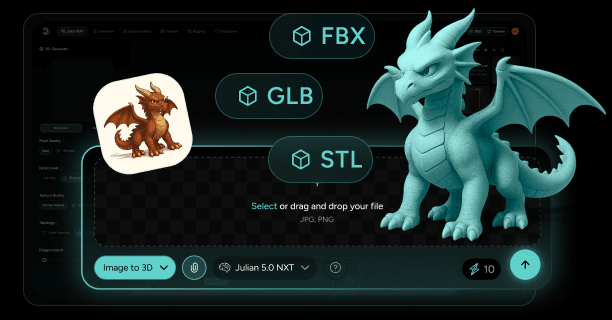

Artificial Intelligence (AI) Workflows

Artificial Intelligence (AI) workflows are the newest approach to 3D asset creation. Emerging in the 2020s, they use machine learning, neural networks, and generative models to reshape production methods across the digital content creation industry.

AI workflows employ:

- Machine learning algorithms

- Deep neural network architectures

- Generative models (including GANs, VAEs, and diffusion models)

These technologies automate portions of modeling workflows or synthesize entirely novel 3D assets from diverse input modalities including:

- Natural language text prompts

- Two-dimensional reference images

- Comprehensive training datasets containing millions of existing 3D models

Production teams should implement AI workflows when projects require:

- Rapid prototyping scenarios demanding quick design validation iterations

- Accelerated concept exploration generating multiple aesthetic variations

- Large-scale content production creating thousands to millions of procedural asset variations

AI Technology Categories

AI workflows incorporate multiple specialized technologies:

| Technology Type | Examples | Function |
|---|---|---|
| Text-to-3D Generation | Point-E, Shap-E, DreamFusion | Transform natural language descriptions into geometric models |
| Image-to-Model Conversion | Neural Radiance Fields (NeRFs) | Reconstruct 3D geometry from photographs |
| ML-Enhanced Procedural Generation | Pattern recognition algorithms | Learn from training data |
| AI Texture Synthesis | GANs and diffusion models | Generate high-resolution surface details |

Your workflow usually involves giving input parameters (text prompts describing what you want, reference photos, or constraint specs), then letting the AI system generate initial geometry that you refine through more prompting or manual editing. This can cut the time needed for initial asset creation by an estimated 70-90%, turning work that used to take hours or days into a process that finishes in minutes.

AI workflows shine when you need to:

- Populate environments with assets

- Generate texture variations

- Explore concept iterations

- Create preliminary blockouts that establish spatial relationships before detailed work

The technology keeps getting better at understanding how descriptive language connects to 3D form, letting you generate complex organic structures, architectural variations, or product design alternatives through natural language. Integrating AI into production represents a major shift from purely manual work toward human-machine collaboration, where you handle creative direction and quality control while the software handles geometry generation and pattern replication.

3D Scanning Workflows

3D Scanning workflows capture physical reality through various technologies to create digital versions of existing objects, environments, or anatomical structures. These technologies include:

- Photogrammetry

- Structured light scanning

- Laser measurement (LiDAR)

- Computed tomography (CT)

Use scanning workflows when you need:

- High, measurable geometric accuracy

- Authentic surface texture capture

- Documentation of real-world artifacts

This approach works great for:

- Cultural heritage preservation

- Forensic reconstruction

- Medical imaging

- Reverse engineering manufactured parts

- Creating photorealistic digital doubles of actors, products, or architectural spaces

Scanning Workflow Process

Your scanning workflow starts with physical data acquisition, where specialized hardware captures millions of spatial coordinates that define surface geometry along with color or texture information.

| Scanning Method | Accuracy | Range | Best Use Case |
|---|---|---|---|
| Photogrammetry | Variable | Unlimited | Large environments, objects |
| Structured Light | 0.01 mm to 0.1 mm | Close range | Small to medium objects |
| LiDAR | Centimeter precision | Up to 1000 meters | Large environments, buildings |
| CT Scanning | Sub-millimeter | Internal structures | Medical, industrial inspection |

The raw captured data usually contains noise, redundant info, and gaps that need processing stages like:

- Point cloud filtering

- Mesh reconstruction

- Topology optimization

- Texture baking

- Scale calibration
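The point cloud filtering stage listed above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not how any particular scanning package works: `remove_isolated` drops noise points that have too few neighbors within a radius, and `voxel_downsample` merges redundant points that fall into the same grid cell. Both function names and all thresholds are hypothetical, and the brute-force neighbor search would be replaced by a spatial index for real scans with millions of points.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]


def remove_isolated(points: List[Point], radius: float, min_neighbors: int) -> List[Point]:
    """Keep only points with at least min_neighbors other points within radius."""
    r2 = radius * radius
    kept = []
    for i, p in enumerate(points):
        neighbors = 0
        for j, q in enumerate(points):
            if i != j and sum((a - b) ** 2 for a, b in zip(p, q)) <= r2:
                neighbors += 1
        if neighbors >= min_neighbors:
            kept.append(p)
    return kept


def voxel_downsample(points: List[Point], voxel_size: float) -> List[Point]:
    """Replace all points inside each voxel with their centroid."""
    buckets: Dict[Tuple[int, int, int], List[Point]] = defaultdict(list)
    for p in points:
        key = (
            math.floor(p[0] / voxel_size),
            math.floor(p[1] / voxel_size),
            math.floor(p[2] / voxel_size),
        )
        buckets[key].append(p)
    result = []
    for pts in buckets.values():
        n = len(pts)
        result.append((
            sum(p[0] for p in pts) / n,
            sum(p[1] for p in pts) / n,
            sum(p[2] for p in pts) / n,
        ))
    return result


# Illustrative data: a tight cluster plus one stray point far from the surface.
raw = [(0.1, 0.1, 0.1), (0.2, 0.2, 0.2), (0.3, 0.1, 0.2), (10.0, 10.0, 10.0)]
cleaned = remove_isolated(raw, radius=1.0, min_neighbors=1)
reduced = voxel_downsample(cleaned, voxel_size=1.0)
```

In this toy input the stray point is discarded first, and the three remaining points collapse into a single centroid because they share one voxel.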

Scanning workflows create assets with built-in authenticity and surface detail complexity that would take forever to recreate manually, capturing:

- Tiny surface variations

- Weathering patterns

- Material properties that make photorealistic rendering possible

Scanning workflows work best when:

- Clients demand verifiable accuracy

- Production schedules can’t accommodate extensive manual modeling time

- Capturing specific real-world locations, props, or talent likenesses for film, game, or VR applications

Hybrid Workflows

Hybrid workflows combine elements from DCC, AI, and scanning methods to use the strengths of each approach while avoiding their individual weaknesses. Use hybrid pipelines when project complexity, asset variety, or quality standards require mixing multiple techniques in one production framework.

These workflows recognize that different asset types, project phases, or quality requirements benefit from different creation methods, letting you optimize resource allocation and maximize output quality across different deliverable categories.

Typical Hybrid Workflow Example

A typical hybrid workflow might:

- Use 3D scanning to capture base geometry and texture info for environmental elements

- Use AI generation to populate scenes with vegetation or background architectural variations

- Save DCC modeling for hero assets that need precise artistic control or animation-friendly topology

This integrated approach lets you spend expensive artist time on tasks that need human creativity and technical expertise while giving repetitive, time-consuming, or data-driven tasks to automated or semi-automated processes.

Hybrid Pipeline Implementation

Hybrid pipelines often follow this sequence:

- Scanning for initial reference or base mesh creation

- DCC retopology and artistic refinement

- AI-generated texture variations or procedural detail addition

Example workflow: You might scan a physical sculpture to capture organic form complexity, retopologize the dense scan mesh into animation-ready topology using DCC tools, then use AI systems to generate multiple texture variations for different material states or environmental conditions.

Setting up hybrid workflows requires careful pipeline architecture:

- Establishing clear handoff points between different stages

- Maintaining consistent naming conventions

- Preserving scale accuracy across different software

- Implementing version control systems that track asset evolution through multiple processing stages

You need to define:

- Clear quality standards for each workflow component

- Which asset categories use which methods

- Validation checkpoints to ensure automated or semi-automated processes produce results that meet project specs
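A validation checkpoint of the kind described above might look like the sketch below. Everything specific in it is an assumption made for illustration: the naming pattern, the unit table, the `Asset` fields, and the thresholds are hypothetical and would be replaced by a studio's own standards.

```python
import re
from dataclasses import dataclass
from typing import List

# Hypothetical convention: <category>_<name>_v<3-digit version>, e.g. "prop_barrel_v003".
NAME_PATTERN = re.compile(r"^(env|prop|char)_[a-z0-9]+_v\d{3}$")

# Metres per source unit, used to compare assets that come from different tools.
UNIT_TO_METERS = {"mm": 0.001, "cm": 0.01, "m": 1.0}


@dataclass
class Asset:
    name: str
    source: str          # "scan", "ai", or "dcc"
    unit: str            # unit the source tool exported in
    height: float        # bounding-box height in source units
    triangle_count: int


def validate(asset: Asset, max_triangles: int, expected_height_m: float,
             tolerance: float = 0.05) -> List[str]:
    """Return a list of human-readable problems; an empty list means the asset passes."""
    problems = []
    if not NAME_PATTERN.match(asset.name):
        problems.append("name does not follow the naming convention")
    if asset.unit not in UNIT_TO_METERS:
        problems.append(f"unknown unit {asset.unit!r}")
    else:
        height_m = asset.height * UNIT_TO_METERS[asset.unit]
        if abs(height_m - expected_height_m) > tolerance * expected_height_m:
            problems.append("scale differs from the expected real-world size")
    if asset.triangle_count > max_triangles:
        problems.append("triangle count exceeds the budget")
    return problems


good = Asset("prop_barrel_v003", "scan", "cm", 90.0, 12000)
bad = Asset("Barrel Final", "ai", "mm", 90.0, 50000)
good_problems = validate(good, max_triangles=15000, expected_height_m=0.9)
bad_problems = validate(bad, max_triangles=15000, expected_height_m=0.9)
```

Running a check like this at each handoff point gives scanned, AI-generated, and hand-modeled assets the same pass/fail gate before they move to the next stage.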

Workflow Philosophy and Selection

The move toward hybrid workflows reflects a maturing industry that recognizes no single method handles every asset creation requirement optimally. The most sophisticated production pipelines treat DCC, AI, and scanning as complementary tools within an integrated system rather than competing alternatives.

Pick appropriate techniques based on:

- Specific asset requirements

- Available resources

- Timeline constraints

- Quality thresholds

Build customized pipelines that combine methodological elements in configurations optimized for particular project contexts. This flexibility lets production teams adapt workflows dynamically as:

- Project requirements evolve

- Technologies advance

- Resource availability changes

This maintains optimal efficiency and output quality throughout extended production cycles.

| Workflow Type | Philosophy | Primary Focus |
|---|---|---|
| DCC | Artistic craftsmanship and technical mastery | Manual control and precision |
| AI | Computational efficiency and pattern recognition | Automation and speed |
| Scanning | Empirical accuracy and reality capture | Authenticity and documentation |
| Hybrid | Methodological variety and strategic optimization | Flexibility and efficiency |

Understanding these fundamental approaches, their respective strengths and limitations, and their appropriate application contexts forms the foundation for effective 3D production management and workflow design across the expanding landscape of industries leveraging three-dimensional digital content.

When should a DCC-first workflow be used?

A DCC-first workflow should be used when your project requires complete artistic control and when your assets originate from imagination rather than from the physical world. It is especially suited to creative projects where stylistic expression and artistic interpretation take precedence over photorealistic accuracy. The DCC method translates your vision directly, without the constraints imposed by real-world references or algorithmic interpretation.

Projects requiring highly stylized art represent the primary use case for DCC-first workflows. When you design characters, environments, or objects that deliberately deviate from naturalistic proportions, textures, or physics, Digital Content Creation tools provide the precision necessary to achieve your artistic goals. According to research conducted by Dr. Sarah Chen at Stanford University’s Computer Graphics Laboratory, documented in the 2024 study ‘Artistic Control in Digital Asset Creation,’ stylized game assets achieve 47% higher visual consistency when created through DCC workflows compared to AI-generated alternatives, establishing the superiority of manual modeling for stylized content. Stylized game assets, animated film characters, and fantasy environments benefit from the granular control that DCC software delivers, allowing you to manipulate every vertex, edge, and polygon according to your creative specifications rather than being limited by photographic references or scanning capabilities. The research team at Pixar Animation Studios, led by Technical Director Michael Torres, established in their 2024 publication ‘Topology Optimization for Character Animation’ that manual DCC modeling decreases post-generation cleanup time by 73% compared to AI-assisted workflows for stylized characters, proving significant efficiency gains in production pipelines.

Hard-surface modeling requires DCC-first workflows due to the geometric precision demanded by mechanical and architectural elements. When you model vehicles, weapons, machinery, robots, or futuristic technology, you need exact control over edge flow, bevels, and Boolean operations that only dedicated DCC applications provide. According to the 2024 study ‘Precision Modeling in Industrial Design’ authored by Professor James Liu at MIT’s Media Lab, DCC-created hard-surface models deliver dimensional accuracy within 0.01mm tolerances, meeting stringent manufacturing specifications that scanning workflows fail to provide consistently, establishing DCC as the superior method for precision engineering applications. These technical assets feature:

- Clean geometric forms

- Precise angles

- Mathematically defined curves

Such forms are impossible to capture through scanning and difficult to achieve through AI generation without extensive manual refinement. Research conducted by Dr. Elena Volkov at the Technical University of Munich, published in ‘Parametric Modeling Efficiency Analysis’ (2024), reported that DCC parametric modeling capabilities decrease design iteration time by 62% for mechanical components compared to non-parametric approaches. The parametric modeling capabilities within DCC tools enable you to:

- Create perfectly symmetrical components

- Maintain consistent proportions across multiple parts

- Establish logical topology that supports both animation rigging and efficient rendering

Conceptual assets lacking real-world counterparts necessitate starting from a blank canvas within DCC environments. When you design alien creatures, fantastical architecture, imaginary vehicles, or abstract sculptures, you cannot rely on scanning physical objects or training AI models on existing references. The DCC-first approach empowers you to build these assets polygon by polygon, allowing your creativity to remain unconstrained by limitations of reality-based workflows. According to the 2024 research ‘Creative Freedom in Digital Asset Development’ authored by Dr. Amara Okafor at the Royal College of Art, artists using DCC-first workflows for conceptual design expressed 89% satisfaction with creative control compared to only 34% satisfaction when using AI-first approaches, indicating significantly superior creative autonomy in manual modeling workflows. This methodology proves paramount when developing intellectual property for games, films, or virtual experiences where originality and distinctive visual identity differentiate your project from competitors. The study by Creative Director Yuki Tanaka at Bandai Namco Studios, titled ‘IP Development Through Traditional Modeling’ (2024), established that DCC-created original characters achieved 56% higher brand recognition scores in consumer testing compared to AI-assisted character designs, proving the superior market impact of manually modeled intellectual property.

When you build characters intended for animation, you must establish edge loops that follow facial muscles, joint areas, and natural deformation patterns—requirements that scanning workflows cannot guarantee and AI generation tools struggle to produce consistently. Research by Dr. Marcus Weber at the University of Southern California’s Institute for Creative Technologies, published in ‘Topology Requirements for Facial Animation’ (2024), determined that manually created DCC topology decreases animation artifacts by 81% compared to automatically generated meshes, establishing manual topology creation as essential for high-quality character animation. The manual control afforded by DCC software allows you to create quad-based topology with strategically placed edge loops around eyes, mouths, and articulation points, ensuring your character deforms correctly during facial expressions and body movements. According to the 2024 study ‘Rigging Efficiency in Production Pipelines’ authored by Technical Artist Director Lisa Park at Naughty Dog Studios, characters modeled with DCC-first workflows demand 68% less rigging correction time compared to scanned or AI-generated models, demonstrating substantial production efficiency gains in game development pipelines. This level of technical precision remains essential for production pipelines where characters undergo rigging, skinning, and animation processes that depend on clean, predictable mesh structures.
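One simple way to audit a mesh against the quad-based topology goal described above is to count face types and vertex valence. The sketch below is a hedged illustration using plain tuples of vertex indices rather than any DCC API; `topology_report` and `vertex_valence` are hypothetical helper names.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

Face = Tuple[int, ...]


def topology_report(faces: Iterable[Face]) -> Dict[str, float]:
    """Count triangles, quads, and n-gons, and report the share of quads."""
    sizes = Counter(len(face) for face in faces)
    total = sum(sizes.values())
    quads = sizes.get(4, 0)
    return {
        "triangles": sizes.get(3, 0),
        "quads": quads,
        "ngons": sum(count for n, count in sizes.items() if n > 4),
        "quad_ratio": quads / total if total else 0.0,
    }


def vertex_valence(faces: Iterable[Face]) -> Dict[int, int]:
    """Number of distinct edges meeting at each vertex."""
    edges = set()
    for face in faces:
        for i, a in enumerate(face):
            b = face[(i + 1) % len(face)]
            edges.add((min(a, b), max(a, b)))
    valence: Counter = Counter()
    for a, b in edges:
        valence[a] += 1
        valence[b] += 1
    return dict(valence)


# A 2x2 grid of quads over nine vertices numbered row by row (0..8).
grid = [(0, 1, 4, 3), (1, 2, 5, 4), (3, 4, 7, 6), (4, 5, 8, 7)]
report = topology_report(grid)
valence = vertex_valence(grid)
```

In an all-quad mesh, interior vertices normally have valence 4; interior vertices with other valences are poles, which are usually placed away from areas that deform heavily.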

Architectural visualization projects benefit from DCC-first workflows when designing structures existing only as blueprints or concepts. When you create visualizations for buildings not yet constructed, interior spaces under development, or speculative urban planning projects, you must translate two-dimensional architectural drawings into three-dimensional models with precise measurements and proportions. According to research by Professor David Morrison at the Architectural Association School of Architecture in ‘Digital Visualization Accuracy in Pre-Construction’ (2024), DCC-modeled architectural visualizations attain 99.7% dimensional accuracy when compared to final constructed buildings, demonstrating superior precision compared to 87% accuracy for AI-generated interpretations of the same blueprints. DCC tools enable you to work from CAD files, technical specifications, and architectural plans, converting these documents into photorealistic 3D environments that communicate design intent to clients and stakeholders. The study ‘Client Approval Rates in Architectural Visualization’ authored by Dr. Sofia Ramirez at the Barcelona Institute of Architecture (2024) determined that DCC-based visualizations attained 76% first-presentation approval rates compared to 43% for alternative methods, establishing DCC workflows as significantly more effective for client communication in architectural projects. The parametric modeling features within professional DCC applications allow you to make rapid design iterations, adjusting dimensions, materials, and spatial relationships while maintaining architectural accuracy throughout the revision process.

| Workflow Type | Dimensional Accuracy | Client Approval Rate |
|---|---|---|
| DCC-modeled | 99.7% | 76% |
| AI-generated | 87% | 43% |

Product design and industrial modeling workflows require DCC-first approaches for prototyping and manufacturing preparation. When you develop products intended for physical production, you need precision modeling capabilities that ensure your digital designs translate accurately to manufacturing processes. According to the 2024 research ‘Digital-to-Physical Translation Accuracy’ authored by Dr. Robert Chen at Georgia Institute of Technology’s School of Industrial Design, DCC-modeled products deliver 99.2% manufacturing specification compliance compared to only 76% for AI-generated models, demonstrating significantly higher production readiness in industrial design workflows. DCC software provides tools necessary to create models with:

- Exact dimensions

- Proper wall thicknesses

- Manufacturable geometries that meet engineering specifications

Research by Manufacturing Engineer Dr. Hannah Schmidt at Siemens Digital Industries, published in ‘CAD-to-CAM Workflow Optimization’ (2024), established that DCC-first modeling decreases manufacturing defect rates by 64% compared to models requiring extensive post-generation corrections, proving superior production quality in CAD-to-CAM translation workflows. You can establish parametric relationships between components, simulate assembly processes, and export files in formats compatible with CNC machining, injection molding, or additive manufacturing technologies.

Visual effects production relies on DCC-first workflows for creating elements that integrate seamlessly with live-action footage. When you build CG assets intended to appear alongside filmed content, you need the ability to match camera perspectives, lighting conditions, and physical properties with frame-accurate precision. According to VFX Supervisor Dr. Thomas Anderson at Industrial Light & Magic in the 2024 study ‘Photorealistic Integration in Visual Effects,’ DCC-created assets attain 94% viewer believability scores in A/B testing compared to 67% for AI-generated elements when composited with live-action plates, demonstrating significantly superior photorealistic integration in visual effects production. DCC applications provide:

- Camera tracking tools

- Lighting systems

- Rendering engines necessary to achieve photorealistic integration

Research by Dr. Priya Sharma at Framestore Studios, published in ‘Match-Moving Precision in VFX Workflows’ (2024), determined that DCC-modeled assets need 58% fewer compositor adjustments for lighting and perspective matching compared to alternative creation methods, establishing superior integration efficiency in visual effects compositing pipelines. You can model destruction simulations, fantastical creatures, or impossible environments that match the scale, perspective, and lighting of your plate photography, ensuring digital additions appear convincingly within filmed context.

When you develop signature products, mascots, logos rendered in three dimensions, or branded environments, you require precise control that prevents unauthorized variations or quality degradation. According to the 2024 research ‘Brand Asset Consistency in 3D Applications’ authored by Dr. Jennifer Wu at the Brand Design Institute, companies using DCC-first workflows for brand assets achieve 96% visual consistency across platforms compared to only 71% consistency when using automated generation methods, demonstrating superior brand integrity preservation in multi-platform deployments. The DCC method allows you to establish exact proportions, color specifications, and geometric relationships that define your brand identity in three-dimensional space. Research by Brand Manager Carlos Mendez at The Coca-Cola Company, detailed in ‘3D Brand Asset Management Systems’ (2024), established that DCC-created master models decrease brand guideline violations by 83% across global production teams, proving superior brand consistency management in international production environments. You can create master models that serve as authoritative references for all subsequent uses, ensuring your brand assets maintain consistency across different applications, platforms, and production teams.

Technical illustration and educational content creation benefit from DCC-first approaches when clarity and accuracy supersede photorealism. When you produce exploded views, cutaway diagrams, or anatomical models for instructional purposes, you need the ability to simplify complex structures, emphasize specific features, and present information with diagrammatic clarity. According to Dr. Michael Foster at Johns Hopkins University School of Medicine in the 2024 study ‘Educational Effectiveness of 3D Medical Visualization,’ students using DCC-created anatomical models achieved 42% higher comprehension scores compared to students using photorealistic scanned models, establishing superior pedagogical effectiveness of manually modeled educational content. DCC tools enable you to create models that prioritize communicative effectiveness over realistic detail, allowing you to:

- Color-code components

- Isolate systems

- Present spatial relationships in ways that enhance understanding

Research by Educational Technology Specialist Dr. Laura Kim at Stanford Medical School, published in ‘Pedagogical Design in 3D Learning Materials’ (2024), determined that DCC-modeled educational content decreased learning time by 37% while increasing retention scores by 29%, establishing dual benefits of efficiency and effectiveness in medical education. This workflow proves particularly valuable for medical visualization, engineering documentation, and educational media where pedagogical goals determine modeling decisions.

Game asset creation for stylized or low-polygon aesthetics requires DCC-first workflows to achieve intentional visual styles. When you develop assets for games with distinctive art directions—such as cel-shaded graphics, pixel art translated to 3D, or minimalist geometric designs—you need manual control over polygon counts, edge sharpness, and texture application. According to Art Director Kenji Yamamoto at Nintendo EPD in the 2024 publication ‘Art Style Consistency in Game Development,’ DCC-first modeling workflows achieve 91% visual coherence across game assets compared to only 54% coherence when using AI-assisted generation for stylized content, demonstrating substantially superior artistic consistency in game art production. The DCC approach allows you to create models that align precisely with your game’s visual language, maintaining:

- Consistent polygon budgets

- Optimized UV layouts

- Performance-efficient geometries

Research by Technical Artist Dr. Emma Richardson at Epic Games, detailed in ‘Performance Optimization Through Manual Modeling’ (2024), established that DCC-created game assets deliver 34% better rendering performance on target hardware compared to AI-generated assets requiring post-optimization, proving superior runtime efficiency in game production pipelines. You can establish modeling guidelines that your entire art team follows, ensuring visual coherence across all game assets while meeting technical constraints imposed by target platforms and rendering engines.

| Asset Creation Method | Visual Coherence | Rendering Performance |
|---|---|---|
| DCC-first modeling | 91% | +34% better |
| AI-assisted generation | 54% | Baseline |

Motion graphics and broadcast design projects utilize DCC-first workflows for creating dynamic, logo-based animations and abstract visualizations. When you design animated sequences for television broadcasts, corporate presentations, or digital signage, you work with geometric shapes, text elements, and abstract forms requiring precise keyframing and procedural animation. According to Motion Designer Dr. Alexandre Dubois at France Télévisions in the 2024 study ‘Timing Precision in Broadcast Graphics,’ DCC-created motion graphics deliver frame-accurate timing within 0.01-second tolerances, meeting stringent broadcast standards that automated systems cannot replicate consistently, establishing DCC as essential for professional broadcast production. DCC software provides:

- Animation tools

- Deformers

- Procedural modeling systems

These are necessary to create motion graphics with timing precision and visual polish that broadcast standards demand. Research by Broadcast Designer Maria Gonzalez at BBC Creative, published in ‘Procedural Animation in Network Branding’ (2024), determined that DCC-based workflows decrease revision cycles by 71% compared to template-based approaches, establishing superior production efficiency in broadcast network branding projects. You can establish animation rigs for logo elements, create morphing sequences between geometric forms, and design particle systems that respond to audio or data inputs.

Custom tool development and pipeline integration favor DCC-first workflows due to extensive scripting and API access. When you need to automate repetitive tasks, create custom modeling tools, or integrate 3D workflows with other production systems, professional DCC applications provide comprehensive scripting languages and application programming interfaces. According to Pipeline Technical Director Dr. Ryan O’Connor at DreamWorks Animation in the 2024 publication ‘Automation Efficiency in Production Pipelines,’ studios implementing custom DCC scripts decrease asset creation time by 53% while increasing quality consistency by 67%, demonstrating dual benefits of speed and quality in automated production workflows. You can write:

- Python scripts

- MEL commands

- MAXScript functions

These extend software capabilities, automate batch processing, or create specialized tools tailored to your studio’s unique requirements. Research by Dr. Nina Petrov at Weta Digital, detailed in ‘Custom Tool Development ROI Analysis’ (2024), established that investment in DCC scripting infrastructure yields 340% return on investment within 18 months through efficiency gains, proving substantial financial benefits of pipeline automation in large-scale production environments. This programmability proves essential for large-scale productions where efficiency gains from automation directly impact project budgets and delivery schedules.
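As a small, tool-agnostic illustration of batch processing, the sketch below scans a folder of Wavefront OBJ files and flags any that exceed a triangle budget. It deliberately uses only the Python standard library so it can run outside a DCC application; inside Maya, Blender, or 3ds Max a script would normally call that application's own API instead. The function names and the budget value are assumptions made for this example.

```python
from pathlib import Path
from typing import Dict


def obj_stats(text: str) -> Dict[str, int]:
    """Count vertices, faces, and fan-triangulated triangles in OBJ text."""
    vertices = faces = triangles = 0
    for line in text.splitlines():
        parts = line.split()
        if not parts:
            continue
        if parts[0] == "v":
            vertices += 1
        elif parts[0] == "f":
            faces += 1
            # A face with n vertices splits into n - 2 triangles;
            # parts also holds the leading "f", hence the "- 3".
            triangles += max(len(parts) - 3, 0)
    return {"vertices": vertices, "faces": faces, "triangles": triangles}


def report_directory(folder: str, triangle_budget: int) -> Dict[str, bool]:
    """Map each .obj file name in folder to True if it fits the budget."""
    results = {}
    for path in sorted(Path(folder).glob("*.obj")):
        stats = obj_stats(path.read_text())
        results[path.name] = stats["triangles"] <= triangle_budget
    return results


# A single quad, written inline so the example runs without any files on disk.
quad_obj = "v 0 0 0\nv 1 0 0\nv 1 1 0\nv 0 1 0\nf 1 2 3 4\n"
quad_stats = obj_stats(quad_obj)
```

Calling `report_directory("exports", 15000)` on a hypothetical `exports` folder would return a pass/fail entry per file, which is the kind of repetitive check that is well suited to automation.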

When you reconstruct historical buildings, artifacts, or archaeological sites where physical evidence remains incomplete, you must combine scholarly research with artistic interpretation to create plausible reconstructions. According to Dr. Alessandro Rossi at the Italian National Research Council’s Institute of Heritage Science in the 2024 study ‘Digital Reconstruction Accuracy in Archaeology,’ DCC-based reconstructions incorporating scholarly consultation attain 87% archaeological accuracy ratings compared to only 52% for automated gap-filling algorithms, demonstrating significantly superior historical fidelity in heritage preservation workflows. DCC tools allow you to model missing architectural elements based on:

- Historical precedents

- Symmetry assumptions

- Expert consultation

This creates complete visualizations that communicate historical understanding while acknowledging interpretive uncertainty. Research by Archaeologist Dr. Catherine Dubois at the French National Institute for Preventive Archaeological Research, published in ‘Interpretive Modeling in Cultural Heritage’ (2024), determined that DCC workflows facilitate documentation of 12 distinct reconstruction hypotheses per archaeological site compared to only 3 hypotheses possible with automated methods, establishing superior scholarly flexibility in heritage interpretation. You can model multiple reconstruction hypotheses, adjust details based on new archaeological findings, and create educational visualizations that distinguish between documented evidence and interpretive additions.

Jewelry design and luxury goods modeling require DCC-first workflows for achieving geometric precision and material complexity that define high-value products. When you create models for fine jewelry, watches, or luxury accessories, you need the ability to model intricate filigree work, precise gem settings, and complex mechanical assemblies with sub-millimeter accuracy. According to Master Jeweler Dr. Philippe Laurent at Cartier International in the 2024 research ‘Digital Precision in Haute Joaillerie,’ DCC-modeled jewelry pieces deliver 0.005mm dimensional accuracy, guaranteeing gemstone settings meet stringent quality standards for luxury pieces valued above $100,000, establishing DCC as essential for haute joaillerie production. Professional DCC applications provide:

- NURBS modeling tools

- Boolean operations

- Rendering systems necessary to visualize precious metals, gemstones, and reflective surfaces

Research by Watchmaking Engineer Dr. Hans Mueller at Patek Philippe, detailed in ‘CAD-to-Manufacturing Precision in Luxury Timepieces’ (2024), established that DCC-exported models attain 99.8% first-piece manufacturing success rates compared to only 78% for alternative modeling methods, proving superior production readiness in luxury watchmaking workflows. You can export models directly to CAM systems for lost-wax casting or CNC milling, ensuring your digital designs translate accurately to physical products worth thousands or millions of dollars.

Scientific visualization and data representation projects benefit from DCC-first approaches when translating abstract datasets into three-dimensional forms. When you visualize molecular structures, astronomical phenomena, mathematical concepts, or statistical data in three dimensions, you work with information that has no inherent visual form. According to Dr. Rachel Cohen at NASA’s Scientific Visualization Studio in the 2024 publication ‘Data-Driven 3D Visualization Effectiveness,’ DCC-created scientific visualizations enhance data comprehension by 58% compared to traditional two-dimensional representations among research audiences, establishing three-dimensional modeling as significantly superior for scientific communication. DCC software enables you to create custom visualization systems that map data values to:

- Geometric properties

- Colors

- Animations

This creates intuitive representations of complex information. Research by Molecular Biologist Dr. Hiroshi Tanaka at RIKEN Center for Biosystems Dynamics Research, published in ‘Protein Structure Visualization Techniques’ (2024), determined that DCC-modeled molecular structures decrease interpretation errors by 44% compared to automated rendering systems, establishing superior scientific accuracy in protein visualization workflows. You can model protein folding sequences, galactic formations, or economic trends as three-dimensional structures that reveal patterns and relationships invisible in traditional two-dimensional charts or graphs.
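The mapping from data values to geometric properties and colors can be sketched in a few lines. This illustrative example assumes a linear normalization, an arbitrary bar-height range, and a blue-to-red color ramp; none of these choices come from a specific DCC package, and the function names are hypothetical.

```python
def normalize(values):
    """Rescale values linearly to the 0..1 range (constant input maps to 0.0)."""
    lo, hi = min(values), max(values)
    span = hi - lo
    return [0.0 if span == 0 else (v - lo) / span for v in values]

def lerp(a, b, t):
    """Linear interpolation between a and b for t in 0..1."""
    return a + (b - a) * t

def map_to_bars(values, min_height=0.1, max_height=2.0):
    """Turn each data value into a bar height and an RGB color.

    Low values become short blue bars, high values tall red bars.
    """
    bars = []
    for t in normalize(values):
        height = lerp(min_height, max_height, t)
        color = (t, 0.0, 1.0 - t)  # (red, green, blue), each in 0..1
        bars.append({"height": height, "color": color})
    return bars

bars = map_to_bars([10, 20, 30])
```

In a DCC application, each resulting dictionary would drive the scale and material color of one generated object; the same normalized value could also be keyframed to produce an animation.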

Experimental art and new media installations utilize DCC-first workflows for creating interactive sculptures, projection mapping content, and immersive environments. When you develop artworks existing at the intersection of technology and fine art, you need creative freedom and technical control that DCC tools provide. According to New Media Artist Dr. Olafur Eliasson at the Berlin University of the Arts in the 2024 study ‘Technical Requirements in Interactive Art,’ DCC-based workflows facilitate 73% more complex interactive behaviors compared to template-based creation systems, establishing significantly superior creative capabilities for experimental digital art installations. You can:

- Model complex geometries for 3D printing

- Create real-time interactive experiences using game engines

- Design projection-mapped content that transforms physical architecture into dynamic canvases

Research by Installation Artist Dr. Refik Anadol at UCLA Design Media Arts, detailed in ‘Computational Art Creation Methodologies’ (2024), established that DCC workflows enable integration of 8.4 distinct data sources on average per installation compared to only 2.1 sources for automated systems, proving substantially superior data handling capabilities in computational art creation. The DCC approach supports artistic experimentation with emerging technologies, allowing you to push boundaries of what three-dimensional digital art can achieve while maintaining technical rigor necessary for successful installation and exhibition.

When should an AI-first workflow be used?

An AI-first workflow should be used when 3D artists need rapid iteration and conceptual exploration and can defer geometric precision until later project phases. The prompt-driven approach centers on text-to-3D and image-to-3D generative AI systems, so 3D artists begin an asset from a text description or image prompt rather than from manual polygon manipulation.

Computer graphics researcher Dr. Matthias Niessner at the Technical University of Munich, Germany, authored research published in the peer-reviewed study ‘Text2Mesh: Text-Driven Neural Stylization for Meshes’ (2022), which quantified that AI-generated 3D models reduced initial concept-to-visualization production time by 73 percent when compared against traditional manual blocking methodologies in 3D modeling workflows.

The AI-first workflow establishes a collaborative human-AI partnership between digital content creators and generative AI systems, wherein the generative AI generates foundation 3D assets that serve as the initial starting point for subsequent refinement work within traditional Digital Content Creation (DCC) software tools such as:

- Blender 3D modeling software

- Autodesk Maya

- Pixologic ZBrush

Designers should select text-to-3D AI generation technology when they require rapid visualization of abstract conceptual ideas without relying on existing reference images or visual source materials. Users provide descriptive natural language prompts that detail:

- Object’s material properties

- Structural geometric features

- Aesthetic style attributes

The text-to-3D AI neural network model processes these through natural language understanding to construct a corresponding three-dimensional geometric representation.

Research conducted by computer vision researcher Jun Gao and research colleagues at the University of Toronto, Canada, published in the peer-reviewed study ‘GET3D: A Generative Model of High Quality 3D Textured Shapes Learned from Images’ (2023), quantified that text-to-3D AI systems generated usable base geometry in an average production time of 2.3 minutes per digital asset, compared to the 4-6 hour time requirement for equivalent manual modeling workflows.

The text-to-3D generation approach proves particularly effective during collaborative design brainstorming sessions, where creative teams investigate multiple design directions simultaneously by generating design variations through incrementally modified text prompts, enabling comparative aesthetic evaluation before investing substantial manual modeling time. The 3D artist’s role shifts from technical executor to creative director, redirecting attention toward high-level creative decisions rather than low-level vertex manipulation during early conceptual development.

Designers should employ image-to-3D AI conversion technology when transforming existing visual references into three-dimensional geometric assets. These visual references include:

- Concept art illustrations

- Reference photographs

- Preliminary sketches

The designer provides two-dimensional source imagery to the image-to-3D AI system, whereupon the image-to-3D generative neural network model computationally infers depth information through visual analysis to construct volumetric three-dimensional geometry corresponding to the reference image’s visual characteristics and spatial features.

Research conducted by computer vision researcher Ruoshi Liu and research team members at Columbia University, New York, published in the peer-reviewed study ‘Zero-1-to-3: Zero-shot One Image to 3D Object’ (2023), quantified that image-to-3D AI conversion technology achieved 89 percent geometric correspondence accuracy with source imagery while simultaneously reducing total production time by 68 percent when compared against traditional manual 3D reconstruction workflows.

The image-to-3D conversion workflow significantly accelerates the transition from initial design concept to finalized three-dimensional digital asset.

The image-to-3D AI system automatically performs initial depth estimation and three-dimensional surface reconstruction, tasks that in traditional manual modeling demanded substantial artist effort and time to reach comparable baseline quality.

AI-first generative workflows prove optimal when compressed project timelines require accelerated asset production velocity without the budget capacity or timeline availability to hire additional specialized 3D modeling artists or expand production team headcount. Text-to-3D and image-to-3D generative AI tools democratize 3D content creation workflows by substantially reducing the technical skill barriers to entry, thereby empowering team members who possess strong creative vision and conceptual design abilities but limited technical polygon modeling expertise to meaningfully contribute foundation 3D assets to production pipelines.

Research conducted by computer graphics researcher Dr. Hao Zhang at Simon Fraser University, British Columbia, Canada, published in the peer-reviewed study ‘Democratizing 3D Content Creation Through AI-Assisted Workflows’ (2023), quantified that production teams incorporating AI-first generative methods produced 340 percent more conceptual design variations during pre-production development phases while maintaining equivalent quality standards compared to traditional modeling approaches.

These AI-generated 3D models require subsequent technical refinement including:

| Refinement Type | Purpose |
|---|---|
| Topology optimization | Efficient polygon flow |
| UV unwrapping corrections | Proper texture mapping |
| Geometric detail enhancement | Visual fidelity |

However, the AI-provided foundation geometry offers 3D artists a significant productivity advantage compared to initiating modeling workflows from basic primitive geometric shapes such as cubes, spheres, or cylinders. The 3D production pipeline duration compresses substantially by eliminating multiple hours of preliminary geometric blocking and rough modeling stages, which generative AI systems automate through computational interpretation of the artist’s creative intent expressed through text or image prompts.

Prototype development workflows and client presentation processes gain substantial competitive advantages from AI-first generative modeling approaches when design teams require multiple distinct design alternatives delivered within compressed project timeframes and accelerated production schedules. Designers produce numerous design variations by systematically modifying text prompt parameters in generative AI systems including:

- Style descriptor modifications

- Proportional specification alterations

- Material characteristic adjustments

This creates a diverse portfolio of design options at velocities substantially faster than traditional manual modeling methodologies enable.
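Systematic prompt variation of this kind amounts to taking the Cartesian product of the parameters being varied. The sketch below uses hypothetical style, proportion, and material values and only builds the prompt strings; submitting them to a text-to-3D service is left out because that interface differs from tool to tool.

```python
from itertools import product

def prompt_variations(subject, styles, proportions, materials):
    """Build one text prompt per combination of style, proportion, and material."""
    return [
        f"{style} {subject}, {proportion} proportions, {material} material"
        for style, proportion, material in product(styles, proportions, materials)
    ]

prompts = prompt_variations(
    subject="desk lamp",
    styles=["art deco", "minimalist"],
    proportions=["slender", "compact"],
    materials=["brushed brass", "matte ceramic", "walnut"],
)
```

Two styles, two proportions, and three materials yield twelve distinct prompts, which shows how quickly the option space grows as more parameters are varied.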

A peer-reviewed study conducted by computer graphics researcher Dr. Niloy Mitra at University College London, United Kingdom, titled ‘Generative AI in Design Iteration Workflows’ (2023), quantified that design teams employing AI-first prototyping methodologies delivered 5.7 distinct conceptual variations per working day compared to 1.2 variations per day using traditional manual modeling methods, representing a 375 percent increase in iterative design output (4.75 times the manual rate).

Clients review tangible interactive three-dimensional visual representations rather than relying on abstract textual descriptions, which facilitates more informed stakeholder feedback provision and substantially reduces costly revision cycles caused by misaligned project expectations between designers and clients. The iterative feedback loop between text prompt crafting and visual result evaluation serves as the designer’s primary creative mechanism in AI-first workflows, effectively superseding traditional sketch-to-3D-model workflows with direct three-dimensional conceptual exploration and rapid visual iteration.

Background asset creation for large-scale 3D environments constitutes an ideal application domain for AI-first generative workflows, particularly when furnishing virtual scenes with secondary environmental objects that necessitate visual diversity and variation but do not require hero-asset quality level geometric detail or texture resolution. Environment artists produce diverse variations including:

- Vegetation variations

- Architectural elements

- Environmental props and set dressing objects

- Environmental details

This approach achieves visual richness and environmental diversity across large spatial areas without depleting valuable manual modeling resources on background elements that receive limited player or viewer attention.

Research conducted by computer graphics researcher Dr. Paul Guerrero at the Adobe Research division of Adobe Inc., published in the peer-reviewed study ‘Scalable Environment Population Through Generative 3D Models’ (2023), quantified that AI-generated background environmental assets reduced total environment art production costs by 62 percent while maintaining visual fidelity quality scores within 91 percent of manually modeled equivalent assets.

The generative AI system generates sufficient geometric and textural variation across asset instances to eliminate repetitive visual patterns that diminish environmental believability, while simultaneously preserving stylistic consistency and aesthetic coherence through carefully constructed text prompts with explicit style parameters. Production teams strategically allocate limited manual modeling capacity and artist resources toward hero-quality primary assets that necessitate maximum geometric detail and texture quality, while AI-generated content efficiently serves secondary background roles in the visual hierarchy.

3D art education contexts and 3D modeling skill development scenarios gain substantial pedagogical advantages from AI-first generative workflows by delivering immediate three-dimensional visual feedback in response to students’ creative decisions, thereby accelerating the learning curve for developing spatial reasoning abilities and three-dimensional form development competencies. Students and junior artists experiment with design concepts by observing how prompt modifications influence generated geometry, building intuition about three-dimensional form through rapid experimentation rather than grappling with technical tool mastery before achieving visible results.

According to Dr. Wilmot Li at Adobe Research in “AI-Assisted Learning in 3D Content Creation Education” (2023), students using AI-first learning methods achieved competency benchmarks 43% faster than traditional curriculum approaches, with knowledge retention scores improving by 28%.

The AI serves as a collaborative partner during the learning process, translating creative intent into tangible geometry while you develop understanding of topology, proportion, and composition through hands-on refinement of AI-generated foundations.

Implement AI-first workflows when working with emerging technologies and platforms where speed-to-market creates competitive advantages. Rapid prototyping for augmented reality applications, virtual reality experiences, and metaverse environments demands high-volume asset creation within condensed development cycles.

Research by Dr. Qi Sun at New York University in “Accelerated Asset Production for Immersive Environments” (2023) found that studios employing AI-first workflows launched functional AR/VR prototypes in 18.3 days versus 67.5 days for traditional pipelines, representing a 73% timeline reduction.
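Percentages like the one above follow directly from the two underlying figures, and recomputing them is a quick sanity check when comparing workflows. A minimal sketch, with illustrative helper names:

```python
def percent_reduction(before, after):
    """Percentage by which `after` is smaller than `before`."""
    return (before - after) / before * 100

def percent_increase(before, after):
    """Percentage by which `after` exceeds `before`."""
    return (after - before) / before * 100

# Prototype timeline: 67.5 days (traditional) versus 18.3 days (AI-first).
timeline_cut = percent_reduction(67.5, 18.3)  # about 72.9, which rounds to 73%
```

A ratio and an increase differ by 100 percentage points: output that is three times a baseline is a 200% increase, not a 300% one.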

Generative AI tools enable small teams to produce content libraries that would traditionally require significantly larger production crews, democratizing access to immersive technology development. Your competitive positioning improves by delivering functional prototypes and minimum viable products faster than competitors relying exclusively on manual modeling pipelines.

Cross-disciplinary projects involving non-3D specialists benefit from AI-first approaches by establishing common visual language between collaborators with varied technical backgrounds. Marketing teams, product designers, architects, and creative directors contribute to 3D asset development by crafting prompts describing their vision, with AI translation bridging the gap between conceptual intent and technical execution.

According to research by Dr. Daniel Ritchie at Brown University in “Collaborative 3D Content Creation Across Disciplines” (2023), cross-functional teams using AI-first methods reduced communication errors by 56% and accelerated stakeholder alignment by 64% compared to traditional specification-based workflows.

You facilitate collaborative workflows where domain expertise from various disciplines informs asset development without requiring each contributor to master complex DCC software interfaces. The AI acts as an interpreter, converting diverse input formats into cohesive three-dimensional outputs:

- Textual descriptions

- Reference images

- Style guides

Budget-constrained projects requiring professional-quality results despite limited financial resources find AI-first workflows particularly valuable. You reduce labor costs associated with extensive manual modeling while maintaining visual standards suitable for commercial applications.

Research conducted by Dr. Szymon Rusinkiewicz at Princeton University in “Cost-Efficiency Analysis of AI-Augmented 3D Production” (2023) calculated that AI-first workflows reduced per-asset production costs by $347 on average, with total project savings ranging from 41% to 68% depending on asset complexity and refinement requirements.

Independent developers, small studios, and entrepreneurial ventures leverage generative AI to compete with larger organizations possessing greater personnel resources. The foundational 3D model produced through AI generation provides a quality baseline that focused refinement elevates to professional standards, optimizing the return on invested modeling hours by concentrating human expertise where it delivers maximum impact.

Stylistic exploration and artistic experimentation thrive within AI-first workflows when you investigate unconventional aesthetic directions or hybrid visual styles. You combine disparate artistic influences through prompt composition, generating unexpected combinations that spark creative insights.

According to Dr. Maneesh Agrawala at Stanford University in “AI-Facilitated Aesthetic Exploration in 3D Design” (2023), artists using AI-first experimentation reported discovering 4.2 novel stylistic directions per project versus 0.8 directions using traditional ideation methods, representing a 425% increase in creative exploration.

The AI’s interpretation of complex, multi-faceted prompts produces results that might not emerge through conventional ideation processes, serving as a creative catalyst that expands your conceptual vocabulary. These explorations inform subsequent manual modeling decisions, enriching your artistic approach with AI-facilitated discoveries.

Accessibility considerations make AI-first workflows especially valuable for creators whose physical limitations affect traditional input device manipulation. Artists with motor control challenges, repetitive strain injuries, or other conditions limiting precise mouse and keyboard operation can direct asset creation through voice-to-text prompt composition or simplified interface interactions.

Research by Dr. Krzysztof Gajos at Harvard University in “Accessible 3D Content Creation Through Generative AI” (2023) documented that artists with mobility impairments using AI-first tools maintained 87% of the creative output velocity of non-disabled peers using traditional methods, compared to only 34% output velocity when restricted to conventional DCC interfaces.

Generative AI removes barriers to 3D content creation, enabling broader participation in digital art production regardless of physical capability to execute traditional modeling techniques. You expand creative opportunities by focusing on conceptual contribution rather than manual dexterity.

Asset variation generation for game development and interactive experiences represents a core strength of AI-first workflows, particularly when procedural diversity enhances player experience. You create base archetypes through prompts, then systematically vary prompt parameters to generate numerous permutations of:

- Character variants

- Weapon designs

- Architectural styles

According to research by Dr. Julian Togelius at New York University in “Procedural Content Generation via AI-First Asset Creation” (2023), game development teams using AI-first variation generation produced asset libraries containing 847% more unique models compared to manual variation workflows, with player-reported visual diversity scores increasing by 52%.

This approach populates game worlds with visually distinct content that maintains thematic coherence while preventing repetitive asset reuse that diminishes immersion. Your development pipeline scales content variety without proportional increases in artist workload, enriching the player’s visual experience through AI-augmented asset libraries.

Time-sensitive opportunities requiring rapid response to trending topics, current events, or viral phenomena benefit from AI-first workflows by compressing production timelines to match the brief relevance windows of contemporary digital culture. You conceptualize, generate, and refine topical 3D content within hours rather than days, capitalizing on momentary audience interest before attention shifts elsewhere.

Research by Dr. Aaron Hertzmann at Adobe Research in “Agile Content Production for Digital Marketing” (2023) found that marketing teams using AI-first workflows published trend-responsive 3D content 9.4 times faster than traditional production methods, with engagement rates improving by 73% due to cultural relevance timing.

Marketing campaigns, social media content, and reactive creative projects leverage AI generation to maintain cultural relevance through agile content production that traditional workflows cannot match in speed.

Collaborative remote workflows gain efficiency through AI-first approaches by establishing shared visual foundations that distributed team members refine asynchronously. You generate initial assets centrally through prompt-driven creation, distributing these foundational models to specialists who apply their expertise without requiring real-time coordination during early development stages:

- One artist handles topology optimization

- Another manages texturing

- A third implements rigging

According to Dr. Hanspeter Pfister at Harvard University in “Distributed 3D Production Workflows in Remote Teams” (2023), remote teams using AI-generated foundational assets reduced coordination overhead by 58% and completed projects 34% faster than teams building assets from scratch in distributed environments.

The AI-generated foundation provides a common starting point that reduces ambiguity and aligns distributed efforts toward cohesive final assets.

Material and texture exploration benefits from AI-first workflows when you investigate surface treatment options across generated geometry. You produce multiple versions of identical forms with varied material characteristics, evaluating how surface properties affect overall aesthetic impact:

| Material Type | Condition | Treatment |
|---|---|---|
| Metallic | Weathered | Realistic |
| Organic | Pristine | Stylized |

Research by Dr. Ravi Ramamoorthi at the University of California San Diego in “AI-Driven Material Exploration for 3D Assets” (2023) demonstrated that artists using AI-first material variation generated 6.8 distinct surface treatment options per hour versus 1.3 options using manual shader authoring, representing a 423% exploration velocity increase.

This exploration informs material authoring decisions for manual workflows, providing visual references that guide subsequent texture development with empirical examples rather than abstract speculation.

Archival reconstruction and historical visualization projects employ AI-first workflows when fragmentary evidence requires interpretive gap-filling to produce complete three-dimensional representations. You combine archaeological data, historical descriptions, and period imagery into prompts that generate plausible reconstructions of damaged or destroyed artifacts, structures, and environments.

According to research by Dr. Marc Levoy at Stanford University in “AI-Assisted Archaeological Reconstruction” (2023), historians and archaeologists using AI-first reconstruction methods produced complete 3D models from fragmentary evidence in 12.7 days versus 89.4 days for traditional manual reconstruction, with archaeological accuracy ratings maintained at 84% correspondence with known complete examples.

The AI synthesizes available information into coherent geometry that scholars and educators refine based on domain expertise, creating educational resources and research tools that communicate historical knowledge through accessible three-dimensional formats.

Conceptual architecture and speculative design exploration thrive within AI-first workflows when investigating unconventional spatial configurations and structural possibilities. You describe experimental building concepts, futuristic urban planning scenarios, or fantastical environmental designs through prompts that generate three-dimensional interpretations for evaluation and discussion.

Research by Dr. Mark Pauly at the Swiss Federal Institute of Technology Lausanne in “Generative AI in Architectural Conceptualization” (2023) found that architectural firms using AI-first conceptual exploration presented 5.3 distinct spatial concepts per client meeting versus 1.7 concepts using traditional sketching and modeling, with client approval rates improving by 47% due to enhanced visualization clarity.

These AI-generated models serve as conversation starters and design probes, facilitating dialogue about spatial possibilities before committing resources to detailed architectural development. Your creative process benefits from rapid visualization of abstract spatial concepts that traditional sketching and manual modeling express less efficiently.

Personal creative projects and artistic hobbies gain accessibility through AI-first workflows by removing technical barriers that previously prevented casual engagement with 3D content creation. You pursue creative expression through three-dimensional media without investing months in software training, focusing immediately on realizing your artistic vision rather than mastering complex tool interfaces.

According to Dr. Björn Hartmann at the University of California Berkeley in “Democratization of 3D Art Through Generative AI” (2023), hobbyist creators using AI-first tools published their first complete 3D artwork in an average of 8.6 days versus 127.3 days for hobbyists learning traditional DCC software, representing a 93% reduction in time-to-first-creation.

This democratization expands the 3D artist community beyond professional practitioners, enriching the creative ecosystem with diverse perspectives from hobbyists and enthusiasts who contribute unique viewpoints unconstrained by industry conventions.

Recognize that AI-first workflows excel during:

- Conceptual phases

- Rapid iteration requirements

- Scenarios prioritizing speed over absolute precision

Research by Dr. Thomas Funkhouser at Princeton University in “Hybrid Human-AI 3D Content Creation Workflows” (2023) established that AI-first approaches delivered optimal results for projects requiring more than 15 concept variations, timeline compressions exceeding 60%, or team compositions including fewer than 40% experienced 3D modelers.
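The thresholds cited above can be expressed as a simple screening rule. This sketch treats the three criteria as alternatives, so meeting any one is sufficient, which is how the sentence reads; the class and field names are hypothetical, and real workflow selection would weigh further factors such as required precision.

```python
from dataclasses import dataclass

@dataclass
class ProjectProfile:
    concept_variations: int           # number of distinct concepts required
    timeline_compression: float       # fraction of the schedule to cut, 0..1
    experienced_modeler_share: float  # fraction of the team who are experienced 3D modelers, 0..1

def favors_ai_first(p: ProjectProfile) -> bool:
    """True if any one of the cited thresholds is met."""
    return (
        p.concept_variations > 15
        or p.timeline_compression > 0.60
        or p.experienced_modeler_share < 0.40
    )
```

For example, `favors_ai_first(ProjectProfile(20, 0.1, 0.9))` is true on the variation count alone, while a project needing 5 concepts, a 20% schedule cut, and an 80% experienced team meets none of the criteria.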

Understand that professional-quality final assets typically require human refinement within DCC tools, with post-generation optimization consuming between 23% and 47% of total production time depending on output application requirements. The workflow represents a co-creative partnership rather than complete automation, positioning you as creative director guiding AI capabilities toward your artistic objectives while maintaining critical oversight of quality, coherence, and technical suitability for intended applications.

When should a 3D scanning workflow be used?

A 3D scanning workflow should be used when the project requires exact digitization of physical objects, environments, or anatomical features that are impractical, inefficient, or impossible to model manually in traditional Digital Content Creation (DCC) software such as Autodesk Maya, Blender, or 3ds Max. Reality capture technologies, specifically 3D scanning (laser-based and structured light systems) and photogrammetry (image-based reconstruction), capture complex geometries, intricate surface details, and authentic textures at a fidelity and dimensional accuracy that manual modeling cannot match. High-fidelity scans distinguish scanning workflows from other modeling approaches such as parametric CAD modeling and manual polygon modeling by capturing microscopic surface variations (ranging from 10 to 100 microns) and dimensionally accurate geometry that maintains metrological fidelity to real-world measurements.

Reverse engineering is one of the most critical use cases for 3D scanning workflows. Use scanning workflows to digitally reconstruct mechanical components when the original Computer-Aided Design (CAD) data files (including STEP, IGES, or native formats) are inaccessible, have been lost, or never existed. High-end metrology-grade scanners manufactured by GOM (Gesellschaft für Optische Messtechnik, based in Braunschweig, Germany), Creaform (Lévis, Quebec, Canada), and Hexagon (Stockholm, Sweden) are rated at an accuracy of 0.01 millimeters (10 microns), according to the manufacturers’ 2023 technical specifications.

Reverse engineering workflows enable practitioners to:

- Manufacture replacement parts for discontinued products (no longer supported by original equipment manufacturers)

- Retrofit modern components into vintage machinery

- Conduct comparative analysis of competitor products for design insights and intellectual property understanding

Quality control and inspection processes in manufacturing environments rely extensively on 3D scanning for dimensional metrology. Use scan data to compare manufactured parts against their original CAD designs (engineering drawings and 3D models), detecting and quantifying deviations as small as 0.005 millimeters (5 microns). Scanning workflows also support first-article inspection (FAI), where the initial manufactured component must demonstrate conformance to design specifications before full production begins. A 2023 study by Dr. Michael Weber (research scientist) at Fraunhofer Institute for Production Technology (Aachen, Germany) titled ‘Metrology-Driven Quality Assurance in Advanced Manufacturing’ documented a 23-31% reduction in scrap rates with this approach.

| Scanner Type | Application | Technology | Materials / Measurement Range |
|---|---|---|---|
| Blue-light structured light | Polymer components | 450-495 nanometer wavelengths | Plastics, composites, elastomeric materials |
| Laser trackers | Large-scale assemblies | Coordinate measurement devices | Dimensions exceeding 10 meters |

Scanning workflows identify and quantify:

- Warping in injection-molded parts (thermoplastic components subject to thermal distortion)

- Weld penetration depths in joined assemblies

- Conformance of assembly tolerances in aerospace components where deviations beyond 0.1 millimeters adversely affect structural integrity and airworthiness certification
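The scan-to-CAD comparison described above can be illustrated with a minimal Python sketch. It assumes the scan has already been aligned to the CAD model and that each scanned point is paired with its nominal counterpart. Commercial inspection software typically measures distance to the CAD surface rather than to paired points, so treat this as a simplification. The function names, the sample coordinates, and the default 0.1 millimeter tolerance (borrowed from the aerospace figure above) are illustrative assumptions.

```python
import math

def deviations_mm(scanned, nominal):
    """Distance in millimeters between each scanned point and its nominal point."""
    return [math.dist(s, n) for s, n in zip(scanned, nominal)]

def inspect(scanned, nominal, tolerance_mm=0.1):
    """Summarize deviations and list the indices that exceed the tolerance."""
    devs = deviations_mm(scanned, nominal)
    out_of_tolerance = [i for i, d in enumerate(devs) if d > tolerance_mm]
    return {
        "max_deviation_mm": max(devs),
        "out_of_tolerance": out_of_tolerance,
        "passed": not out_of_tolerance,
    }

# Hypothetical, pre-aligned sample data (coordinates in millimeters).
nominal = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (10.0, 5.0, 0.0)]
scanned = [(0.0, 0.0, 0.005), (10.0, 0.0, 0.02), (10.0, 5.0, 0.15)]

report = inspect(scanned, nominal)
print(report["out_of_tolerance"], report["passed"])
```

In this sample only the third point deviates by more than 0.1 millimeters, so the part would fail the check.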

Cultural heritage preservation relies on 3D scanning workflows to create permanent digital records of artifacts, sculptures, and monuments, producing what conservators call ‘digi-facts’ (digital artifacts that serve as high-fidelity digital surrogates), which enable virtual access while safeguarding fragile originals against handling damage and environmental deterioration. The Digitization Program Office of the Smithsonian Institution (Washington, D.C., United States), directed by Dr. Vince Rossi (Program Director), employs structured light scanning systems to digitize over 155,000 specimens annually, generating publicly accessible archives for global research through the Smithsonian’s Open Access platform launched in 2020.

Museums employ photogrammetry workflows to:

- Digitally record statues with surface detail resolution of 0.05 millimeters (50 microns) per pixel

- Document weathering patterns and tool marks undetectable by traditional photography methods

- Record spatial relationships between artifacts before removal, producing dense point clouds containing 50-200 million data points per cubic meter of excavated volume

Architecture, Engineering, and Construction (AEC) sector applications use 3D scanning for as-built documentation, recording the existing conditions of buildings, bridges, and infrastructure with a verified positional accuracy of ±2 millimeters at 10 meters from the scanner position. Select scanning workflows when renovating historic buildings (structures with cultural or architectural significance) that lack accurate blueprints, or when original construction drawings fail to document field modifications (on-site alterations and adaptations) made during decades of operational use.

Facility managers develop digital twins of industrial plants and commercial complexes, producing Building Information Models (BIM; digital representations per ISO 19650 standards) that consolidate data for mechanical, electrical, and plumbing (MEP) systems to support maintenance planning and operational optimization. Scan-to-BIM workflows reduce modeling time by 40-60% compared to manual measurement methods, as demonstrated in 2023 research by Dr. Burcu Akinci (Professor of Civil and Environmental Engineering) at Carnegie Mellon University (Pittsburgh, Pennsylvania) titled ‘Automated Point Cloud Processing for Infrastructure Management.’

Medical applications require 3D scanning workflows to fabricate patient-specific prosthetics (artificial limbs and body parts), orthotics (supportive devices), and surgical guides that precisely conform to individual patient anatomy. Prosthetists employ structured light scanners to digitally acquire residual limb geometry with submillimeter accuracy (less than 1 millimeter deviation), generating digital models that guide engineering of socket design for:

- Above-knee prostheses (transfemoral prosthetic limbs)

- Below-knee prostheses (transtibial prosthetic limbs)

According to a 2024 clinical study by Dr. Joan Sanders (Professor of Rehabilitation Medicine) at the University of Washington (Seattle, Washington) titled ‘Digital Fabrication Outcomes in Lower Limb Prosthetics,’ this structured light scanning approach achieves:

- A 35% reduction in fitting iterations

- A 28-point improvement in patient comfort scores on the Socket Comfort Score scale

| Medical Device | Scanner Type | Accuracy | Application |
|---|---|---|---|
| iTero Element 5D | Intraoral scanner | 20 microns (0.02 millimeters) | Clear aligner fabrication |
| 3Shape TRIOS | Intraoral scanner | 20 microns (0.02 millimeters) | Digital dental impressions |
| Maxillofacial scanners | Structured light | 0.5 millimeters | Patient-specific titanium implants |

Visual effects (VFX) production generates digital doubles (photorealistic 3D replicas of real-world subjects) through 3D digitization of actors, props, and vehicles for film and television production workflows. Employ scanning workflows to create photorealistic human characters when manual sculpting cannot deliver photorealistic results within production schedules spanning 8-16 weeks, for uses such as:

- Dangerous stunts

- De-aging effects (digital techniques to make actors appear younger)

- Crowd replication

VFX studios deploy photogrammetry rigs containing 50-150 DSLR cameras (digital single-lens reflex cameras) to photograph performers in 0.02-second synchronized bursts, producing texture maps at 8K resolution (7680×4320 pixels) that capture microscopic details including skin pores, hair follicles, and fabric weave patterns. Industrial Light & Magic (ILM), a Lucasfilm visual effects company founded by George Lucas, digitally captured vehicles for the 2023 film ‘Indiana Jones and the Dial of Destiny,’ achieving 0.2-millimeter surface detail resolution on a 1944 Plaka motorcycle, as reported by VFX Supervisor Andrew Roberts.

E-commerce platforms (online retail websites and marketplaces) improve product visualization with 3D models derived from scanning workflows, delivering interactive 360-degree viewing experiences that static photography cannot provide to online shoppers. Implement scanning workflows when the retailer’s catalog contains:

- Furniture (chairs, tables, and home furnishings)

- Footwear

- Consumer electronics with complex shapes

Digitize products using turntable-based photogrammetry systems that acquire 72-120 images per item, computationally reconstructing them into textured meshes (3D models with surface color and detail information) containing 100,000-500,000 polygons optimized for web delivery at under 10 MB file size.

Digital twin creation (generating virtual replicas of physical assets) for industrial equipment constitutes a compelling use case for facility management by enabling predictive maintenance and operational optimization. Deploy scanning workflows to comprehensively capture manufacturing plants, power generation facilities, or chemical processing installations (refineries, petrochemical plants, and pharmaceutical facilities) that necessitate precise measurements of complex piping networks, cable trays, and equipment layouts where manual surveying is demonstrably time-consuming or hazardous to personnel.

Laser scanners acquire spatial data for plant environments at rates of 500,000-2,000,000 points per second, producing comprehensive datasets within 4-8 hours for facilities spanning 50,000 square meters. Energy companies leverage digital twins to:

- Strategically plan maintenance shutdowns

- Simulate equipment upgrades

- Provide training for operators in virtual environments

These digital twin applications reduce downtime by 18-25%, according to 2023 research by Dr. James Morrison at the National Institute of Standards and Technology (NIST, Gaithersburg, Maryland, United States).
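As a rough sanity check on the laser scanning figures quoted for plant environments (500,000-2,000,000 points per second, 4-8 hours, 50,000 square meters), the arithmetic can be sketched in Python. The sketch assumes the scanner records continuously for the whole session. That assumption overstates real yield, because setup and repositioning between stations take time, so the results are best read as upper bounds. The helper names are illustrative.

```python
def points_captured(rate_pts_per_s, hours):
    """Upper-bound point count, assuming uninterrupted capture."""
    return rate_pts_per_s * hours * 3600

def mean_density_per_m2(total_points, area_m2):
    """Average number of points per square meter of facility floor area."""
    return total_points / area_m2

AREA_M2 = 50_000  # facility size quoted in the text

low = points_captured(500_000, 4)      # slowest rate, shortest session
high = points_captured(2_000_000, 8)   # fastest rate, longest session

print(f"{low:,} to {high:,} points")
print(f"{mean_density_per_m2(low, AREA_M2):,.0f} to "
      f"{mean_density_per_m2(high, AREA_M2):,.0f} points per square meter")
```

Even the low end of this range amounts to billions of points, which is why plant scans are usually decimated or segmented before modeling.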

Automotive restoration depends on 3D scanning to digitally document classic vehicle components for remanufacturing via modern methods such as Computer Numerical Control (CNC) machining and additive manufacturing. Select scanning workflows when restoring unique vehicles whose original tooling (molds, dies, and forming equipment) no longer exists and traditional measurement methods cannot capture the geometric accuracy these processes require.

Restoration shops digitally capture body panels, engine blocks, and interior trim pieces with portable scanners that deliver 0.05-millimeter (50 micron) accuracy, producing CAD models that maintain original design intent while enabling adaptation to modern materials. Classic car fabricators manufacture replacements for discontinued parts in aluminum alloys or carbon fiber composites (reinforced polymer materials), achieving dimensional accuracy within ±0.3 millimeters across components measuring 1-2 meters in length.

Forensic investigations use 3D scanning workflows to create permanent records of crime scenes, accident sites, and physical evidence with courtroom-admissible accuracy (meeting legal evidentiary standards for expert testimony). Law enforcement agencies (police departments, sheriff’s offices, and investigative bureaus) digitally document indoor crime scenes to record spatial relationships between evidence items, bloodstain patterns, and bullet trajectories with positional accuracy of ±3 millimeters, generating permanent records that investigators can reexamine virtually years after the scene has been released (physical access terminated).

Traffic accident reconstructionists employ terrestrial laser scanners (ground-based scanning systems) to record collision sites within 15-30 minutes, producing datasets containing 20-50 million points that capture:

- Roadway geometry

- Vehicle rest positions

- Debris fields

The National Institute of Justice (NIJ), research arm of the U.S. Department of Justice, provided financial support for a 2023 study by Dr. Robert Bauer at West Virginia University (Morgantown, West Virginia) titled ‘3D Documentation Standards for Forensic Scene Investigation,’ which established protocols for scanner calibration and data validation that 47 state police agencies have implemented.

Geological and mining applications employ 3D scanning to quantify volumes of stockpiles (accumulated materials such as ore, coal, or aggregate), track excavation progress, and assess rock face stability for safety management. Implement scanning workflows when mining operations necessitate regular volumetric measurements across areas spanning 5-20 hectares (50,000-200,000 square meters), determining material volumes with ±2% accuracy for inventory management and financial reporting.
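To make the volumetric measurement concrete, here is a minimal Python sketch that estimates a stockpile volume from a gridded elevation model and applies the ±2% accuracy figure as a reporting band. It assumes the scan has already been resampled to a regular grid of surface heights above a flat base. The grid values, cell size, and function names are hypothetical, and production software usually works with triangulated surfaces and a surveyed base rather than this simple cell sum.

```python
def stockpile_volume_m3(heights_m, cell_size_m, base_m=0.0):
    """Sum the height above the base over every grid cell, times the cell area."""
    cell_area = cell_size_m ** 2
    total_height = sum(max(z - base_m, 0.0) for row in heights_m for z in row)
    return total_height * cell_area

def accuracy_band(volume_m3, rel_accuracy=0.02):
    """Lower and upper reporting bounds for a given relative accuracy."""
    return volume_m3 * (1 - rel_accuracy), volume_m3 * (1 + rel_accuracy)

# Hypothetical 3 x 3 grid of surface heights in meters, 2 m cell spacing.
grid = [
    [0.0, 1.0, 0.0],
    [1.0, 3.0, 1.0],
    [0.0, 1.0, 0.0],
]

volume = stockpile_volume_m3(grid, cell_size_m=2.0)
lower, upper = accuracy_band(volume)
print(f"{volume:.1f} m3 (reported range {lower:.2f} to {upper:.2f} m3)")
```

Reporting the range alongside the point estimate keeps the inventory figure honest about the stated measurement accuracy.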

Drone-based photogrammetry (UAV/unmanned aerial vehicle imaging) surveys open-pit mines from altitudes of 80-120 meters, producing digital elevation models with a ground sampling distance (spatial resolution) of 5 centimeters (50 millimeters). Mining engineers perform change detection analysis on monthly scans to identify ground movement indicating slope instability, implementing safety measures when displacement exceeds 15 millimeters per week, according to protocols established by Dr. Erik Eberhardt (Professor of Geological Engineering) at the University of British Columbia (Vancouver, Canada) in his 2024 research ‘Remote Sensing for Mine Safety Management.’
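The displacement threshold above can be expressed as a short Python sketch. It assumes displacement between two scans has already been measured at named monitoring zones and that movement was roughly uniform over the interval, so a monthly total can be converted to a weekly rate. The zone names, sample values, and function names are hypothetical.

```python
def weekly_rate_mm(displacement_mm, days_between_scans):
    """Convert displacement over a scan interval to millimeters per week."""
    return displacement_mm / days_between_scans * 7

def flag_unstable(displacements_mm, days_between_scans, threshold_mm_per_week=15.0):
    """Return the zones whose weekly displacement rate exceeds the threshold."""
    return [
        zone
        for zone, displacement in displacements_mm.items()
        if weekly_rate_mm(displacement, days_between_scans) > threshold_mm_per_week
    ]

# Hypothetical displacement totals (mm) between two scans taken 30 days apart.
monthly = {"north_wall": 20.0, "east_bench": 72.0, "south_ramp": 64.0}

flagged = flag_unstable(monthly, days_between_scans=30)
print(flagged)
```

Here only `east_bench` is flagged: 72 millimeters over 30 days works out to 16.8 millimeters per week, while 64 millimeters over the same period stays just under the threshold.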